Originally Posted by

Struan Gray

Hi all :-)

The fundamental reason why you inevitably ratchet downwards in resolution is that the blurs introduced by aberrations and defocus and by diffraction are uncorrelated. The two physical processes spreading the light out are independent and do not influence each other. One just blurs the blurred result of the other, and you always get extra blur, even if only by a little bit.

You can imagine taking some ideal Platonic capture of the image, blurring it a bit to represent aberrations, and then blurring it some more for diffraction. Mathematically the blurring is done with a convolution: imagine taking some of the light in each pixel and spreading it out into neighbouring pixels. Do the same for all the pixels in the image and each new pixel becomes a weighted sum of itself and its surroundings (there are, of course, analytical approaches which handle the non-digitised continuous analogue case).
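To make the "blur, then blur again" picture concrete, here is a minimal one-dimensional sketch in plain Python. The two kernels are made-up illustrative weights, not measured lens data, and `convolve` and `rms_width` are hypothetical helper names. It spreads a single point of light with one kernel, then with the other, and measures the RMS width after each step.

```python
def convolve(signal, kernel):
    """Full discrete convolution: spread each input sample into its neighbours."""
    out = [0.0] * (len(signal) + len(kernel) - 1)
    for i, s in enumerate(signal):
        for j, k in enumerate(kernel):
            out[i + j] += s * k
    return out


def rms_width(profile):
    """Standard deviation of a profile, treating it as a distribution over sample index."""
    total = sum(profile)
    mean = sum(i * p for i, p in enumerate(profile)) / total
    variance = sum((i - mean) ** 2 * p for i, p in enumerate(profile)) / total
    return variance ** 0.5


point = [0.0, 0.0, 1.0, 0.0, 0.0]   # an ideal point of light
aberration = [0.25, 0.5, 0.25]      # assumed kernel, weights sum to 1
diffraction = [0.1, 0.8, 0.1]       # assumed kernel, weights sum to 1

after_one = convolve(point, aberration)
after_both = convolve(after_one, diffraction)

# The second blur can only widen the result of the first.
print(rms_width(point), rms_width(after_one), rms_width(after_both))
```

Because both kernels sum to one, no light is lost; it is only spread out further at each step. Blurring with the two kernels in sequence gives the same result as blurring once with their convolution.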

The finicky details are in the weightings of the surrounding pixels, or, equivalently, in the kernel of the blurring function. Very, very often, it is assumed that the kernel is a Gaussian bell curve shape. That is because a lot of physical processes do indeed produce a Gaussian shape, but also because it's a good approximation to many other shapes, and because a wondrous piece of maths called the Central Limit Theorem means that combining repeated measurements tends to make the overall shape of the kernel converge to a Gaussian.
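The Central Limit Theorem point can be checked numerically. The sketch below is an illustration under assumptions: a flat five-sample box kernel as the starting shape, and hypothetical helper names. It convolves the box with itself repeatedly and tracks how far the result sits from a Gaussian with the same mean and variance.

```python
import math


def convolve(a, b):
    """Full discrete convolution of two sampled kernels."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] += x * y
    return out


def matched_gaussian(profile):
    """Gaussian with the same mean and variance as profile, on the same grid."""
    total = sum(profile)
    mean = sum(i * p for i, p in enumerate(profile)) / total
    var = sum((i - mean) ** 2 * p for i, p in enumerate(profile)) / total
    g = [math.exp(-((i - mean) ** 2) / (2 * var)) for i in range(len(profile))]
    norm = sum(g)
    return [v / norm for v in g]


def max_deviation(profile):
    """Largest sample-by-sample difference from the matched Gaussian."""
    return max(abs(p - q) for p, q in zip(profile, matched_gaussian(profile)))


box = [1 / 5] * 5   # assumed starting kernel: decidedly not a Gaussian
kernel = box
deviations = []
for _ in range(6):
    deviations.append(max_deviation(kernel))
    kernel = convolve(kernel, box)   # stack one more independent blur on top

print(deviations)
```

The deviation after several rounds is much smaller than for the single box, which is the convergence the theorem describes.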

There is also laziness and convenience: a Gaussian can be handled analytically, since you can prove all sorts of useful general theorems about how convolutions of Gaussians give new Gaussians with widths which are simply related to the ones you started with. That is where the 1/R² = 1/R₁² + 1/R₂² rule comes in. In real life things are not that simple: for example, the commonest lineshape for atomic spectra is a Lorentzian, and that doesn't even have a defined variance. You can't come up with a variance-based definition of 'width' and you have no choice but to do the convolution explicitly (or cheat, and use a Gaussian).
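For the Gaussian case the rule is easy to verify numerically. This sketch assumes two blur widths of 3 and 4 pixels (arbitrary illustrative values) and takes resolution R to be inversely proportional to blur width, which is the assumption behind the rule. It convolves the two sampled Gaussians and measures the width of the result.

```python
import math


def gaussian_kernel(sigma, half_width):
    """Sampled, normalised Gaussian on the integer grid -half_width..half_width."""
    g = [math.exp(-x * x / (2 * sigma * sigma))
         for x in range(-half_width, half_width + 1)]
    total = sum(g)
    return [v / total for v in g]


def convolve(a, b):
    """Full discrete convolution of two sampled kernels."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] += x * y
    return out


def rms_width(profile):
    """Standard deviation of a profile over its sample index."""
    total = sum(profile)
    mean = sum(i * p for i, p in enumerate(profile)) / total
    var = sum((i - mean) ** 2 * p for i, p in enumerate(profile)) / total
    return math.sqrt(var)


sigma_lens, sigma_diffraction = 3.0, 4.0   # assumed blur widths in pixels
combined = convolve(gaussian_kernel(sigma_lens, 24),
                    gaussian_kernel(sigma_diffraction, 24))
sigma_total = rms_width(combined)

# Widths add in quadrature, so with R = 1/sigma the 1/R^2 terms simply add.
r_lens, r_diffraction, r_total = 1 / sigma_lens, 1 / sigma_diffraction, 1 / sigma_total
print(sigma_total, math.hypot(sigma_lens, sigma_diffraction))
print(1 / r_total ** 2, 1 / r_lens ** 2 + 1 / r_diffraction ** 2)
```

The measured combined width agrees with sqrt(3² + 4²) = 5 to well within the sampling error, and the two sides of the 1/R² rule match accordingly.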

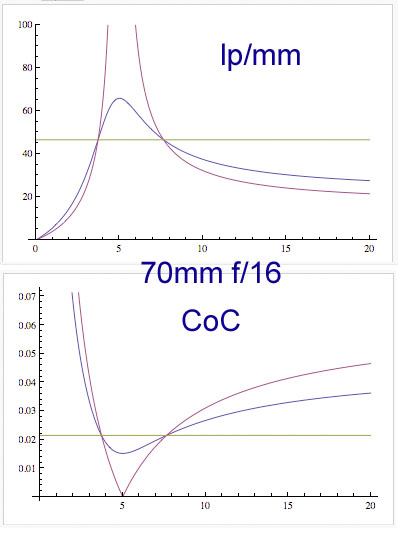

Note that in real life, none of the functions affecting blur in photographs is a Gaussian. Aberrations produce the complex functions seen in spot diagrams, pure defocus is a simple geometric shape, and diffraction is an Airy pattern (for a circular aperture). There is no reason whatsoever to assume that the combination of blurs should follow a 1/R² rule.
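A quick way to see the quadrature rule break down for a non-Gaussian shape is to try it on a flat-topped kernel. The sketch below uses a one-dimensional box as a crude stand-in for a defocus blur (an assumption for illustration, not a model of a real lens) and uses full width at half maximum, counted in samples, as the width measure.

```python
import math


def convolve(a, b):
    """Full discrete convolution of two sampled kernels."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] += x * y
    return out


def fwhm(profile):
    """Full width at half maximum, counted as samples at or above half the peak."""
    half = max(profile) / 2
    return sum(1 for p in profile if p >= half)


box_a = [1 / 11] * 11   # assumed defocus-like kernel, 11 samples wide
box_b = [1 / 11] * 11   # a second, identical blur

combined = convolve(box_a, box_b)   # two boxes convolve to a triangle

measured = fwhm(combined)
quadrature_guess = math.hypot(fwhm(box_a), fwhm(box_b))
print(fwhm(box_a), measured, quadrature_guess)
```

Here the triangle's FWHM stays at 11 samples, whereas adding the two widths in quadrature would predict about 15.6. The RMS width of finite-variance kernels does still add in quadrature, so whether the rule appears to hold depends on which measure of width, and hence of resolution, you use.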

MTFs come in because they are one part of the Fourier Transform of the error kernel. Another useful theorem says that instead of convolving two functions (which is time-consuming, even for a computer) you can instead just multiply their Fourier Transforms together. Thus combining errors, or adding the effects of multiple blurring mechanisms, becomes a simple matter of multiplying the MTFs. The only issue is that you need to keep track of phase, and MTFs only handle magnitude - in 'real' calculations you use the full Optical Transfer Function, which includes phase.
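The convolution theorem can be checked directly on a small example. This is a sketch under assumptions: a naive discrete Fourier transform written out by hand for clarity (a real calculation would use an FFT library), circular convolution on a 16-sample grid, and two made-up kernels, one of them deliberately asymmetric so that its transform carries phase.

```python
import cmath


def dft(samples):
    """Naive discrete Fourier transform; fine for a 16-sample illustration."""
    n = len(samples)
    return [
        sum(s * cmath.exp(-2j * cmath.pi * k * t / n) for t, s in enumerate(samples))
        for k in range(n)
    ]


def circular_convolve(a, b):
    """Circular convolution of two equal-length sequences."""
    n = len(a)
    return [sum(a[j] * b[(i - j) % n] for j in range(n)) for i in range(n)]


n = 16
aberration = [0.0] * n
aberration[0], aberration[1], aberration[2] = 0.6, 0.3, 0.1      # assumed, asymmetric
diffraction = [0.0] * n
diffraction[0], diffraction[1], diffraction[-1] = 0.8, 0.1, 0.1  # assumed, symmetric

# Slow route: convolve first, then transform.
slow = dft(circular_convolve(aberration, diffraction))

# Fast route: transform each kernel, then multiply (the combined OTF).
otf_combined = [p * q for p, q in zip(dft(aberration), dft(diffraction))]

# The MTF is the magnitude of the OTF; the phase is discarded.
mtf_combined = [abs(v) for v in otf_combined]

mismatch = max(abs(p - q) for p, q in zip(slow, otf_combined))
print(mismatch)
print(mtf_combined[:4])
```

The two routes agree to rounding error. The asymmetric kernel gives the combined OTF a non-zero imaginary part, which is exactly the information an MTF alone throws away.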
